tripledip

selected projects by Ben Heasly and tripledip

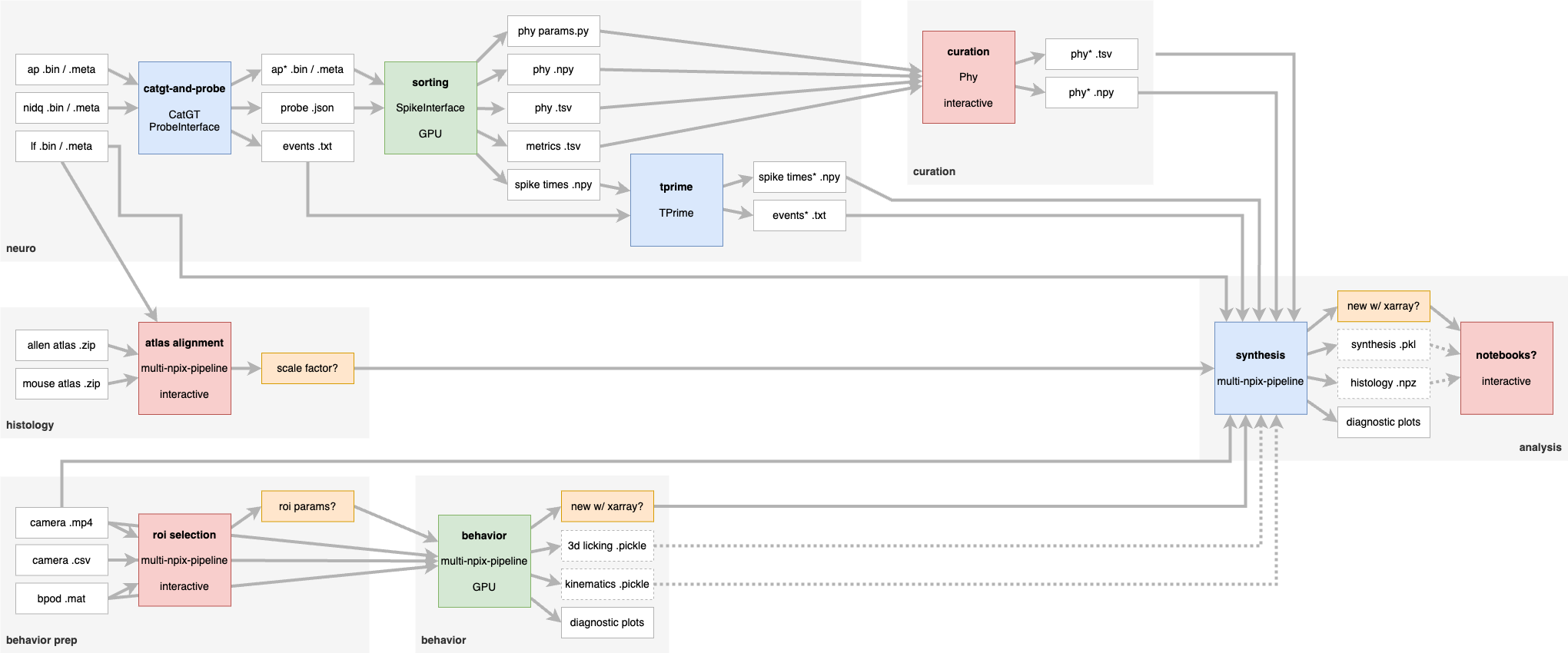

Lab Pipelines

This is a contract project with the Machado Lab at the University of Pennsylvania.

The Machado lab has an existing, successful analysis pipeline for neurophysiology, histology, and behavioral data using tools like Python, Jupyter, Kilosort, Phy, and U-Net.

Together, we are refactoring the pipeline as a collection of explicit, modular, repeatable steps, and integrating these steps using Proceed (as below).

We are using Docker to make each step and its configuration portable and continuously tested via GitHub Actions. With the NVIDIA Container Toolkit we are able to run machine learning tools, including Kilosort and U-Net, with GPU support in various environments including locally, on GitHub Actions, and on Google Cloud Compute Engine.

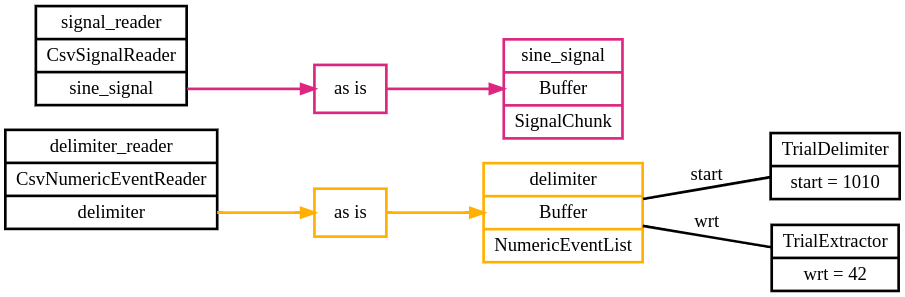

Pyramid

This is a contract project with the Gold Lab at the University of Pennsylvania.

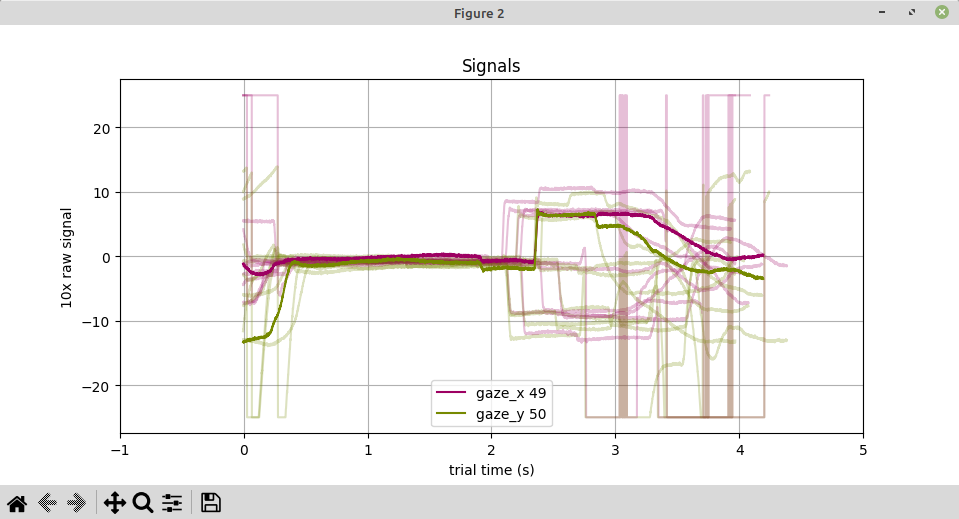

Pyramid is a Python framework for integrating, aligning, delimiting, and enhancing data from electrophysiology and behavioral experiments.

Pyramid defines readers to consume data from various sources including CSV files, Plexon recordings, Open Ephys / ZMQ, Phy files, and potentially many more. All of the data are read into a simple data model called the Neutral Zone, consisting of numeric event lists and sampled signal chunks, both based on NumPy arrays.

Pyramid watches for delimiting events and uses these to partition data into trials — a key concept for analysis in the neuroscience domain. Data within a trial are aligned and can be further enhanced by standard or custom code. Trials are written to disk as JSON Lines or HDF5 and can be plotted using standard and custom plotters written with Matplotlib.

For a given experiment, all of the above is declared in a single YAML file, allowing configuration to be human-readable, sharable, and versionable. The declarative style also allows Pyramid to create graphs of experiment configuration using Graphviz.
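The idea of using delimiting events to partition data into trials can be sketched in a few lines. This is a minimal illustration, not Pyramid's API: the function name `partition_into_trials`, the `align_offset` parameter, and the dictionary keys are all hypothetical, and plain Python lists stand in for the NumPy arrays that Pyramid uses.

```python
from bisect import bisect_left


def partition_into_trials(delimiter_times, event_times, align_offset=0.0):
    """Split sorted event_times into trials bounded by consecutive delimiters.

    Each trial covers the half-open interval [start, end). Event times within
    a trial are re-expressed relative to start + align_offset.
    All names here are hypothetical, chosen for illustration only.
    """
    trials = []
    for start, end in zip(delimiter_times, delimiter_times[1:]):
        # Binary search for the slice of events that falls inside this trial.
        lo = bisect_left(event_times, start)
        hi = bisect_left(event_times, end)
        zero = start + align_offset
        trials.append({
            "start": start,
            "end": end,
            "events": [t - zero for t in event_times[lo:hi]],
        })
    return trials


# Hypothetical data: three delimiting events and a list of spike times.
delimiters = [0.0, 10.0, 20.0]
spikes = [1.0, 2.5, 10.0, 12.0, 19.5, 25.0]
trials = partition_into_trials(delimiters, spikes)
```

In this sketch, events after the last delimiter (here, the spike at 25.0) are not assigned to any trial. A real implementation would need a policy for the final, open-ended trial.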

    steps:
      - name: part_1
        image: ubuntu
        command: echo "hello"
      - name: part_2
        image: alpine
        command: echo "world"

Proceed

This is a contract project with the Gold Lab at the University of Pennsylvania.

Proceed is a Python library and CLI tool for declarative batch processing. It reads a Pipeline specification declared in YAML. A pipeline contains a list of steps that are based on Docker images and containers.

Each execution produces an execution record that accounts for accepted arg values, step logs, and checksums of input and output files.
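To make the idea of an auditable execution record concrete, here is a minimal sketch that checksums input and output files and collects them alongside arg values and logs. It is not Proceed's actual record format: `file_checksum`, `build_record`, and every field name below are assumptions made for illustration.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_checksum(path, chunk_size=65536):
    """Return a SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return "sha256:" + digest.hexdigest()


def build_record(step_name, args, log_text, input_paths, output_paths):
    """Collect what a step ran with and what it produced (hypothetical schema)."""
    return {
        "step": step_name,
        "args": dict(args),
        "log": log_text,
        "files_in": {str(p): file_checksum(p) for p in input_paths},
        "files_out": {str(p): file_checksum(p) for p in output_paths},
    }


# Demo with a throwaway output file standing in for a step's result.
with tempfile.TemporaryDirectory() as tmp:
    out = Path(tmp) / "result.txt"
    out.write_bytes(b"hello\n")
    record = build_record("part_1", {"greeting": "hello"}, "hello\n", [], [out])
    record_json = json.dumps(record, indent=2)
```

Comparing the checksums in two records is one way to confirm that a rerun of the same specification produced the same files.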

Proceed aims to express processing pipelines in a "nothing up my sleeves" way. The YAML specification should be complete enough to share and reproduce, and the execution record should allow for auditing of expected outcomes.

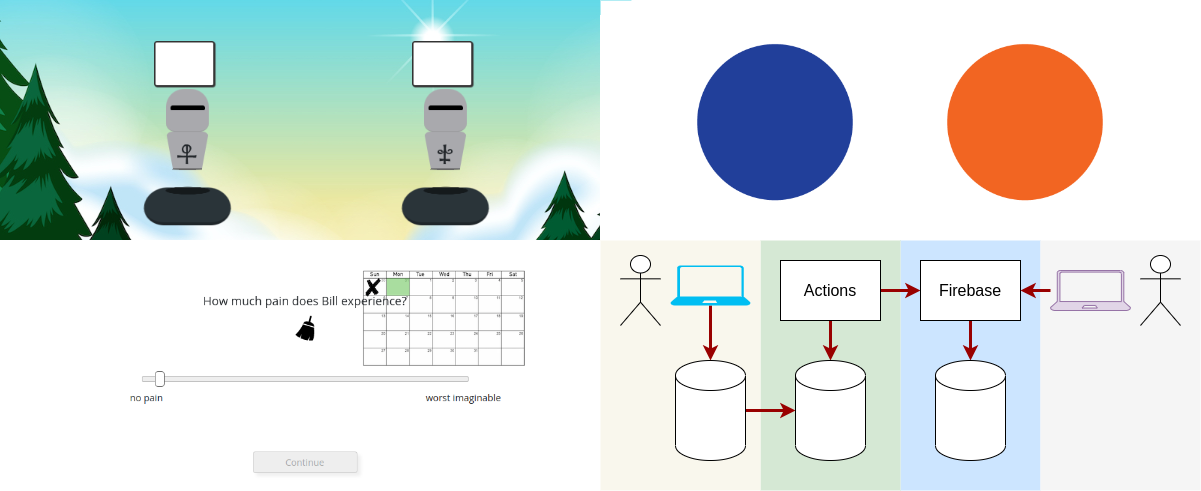

Honeycomb

This was a contract project with the Brown University Learning Memory and Decision Lab and Center for Computation and Visualization.

Honeycomb is a project template developed at Brown CCV enabling jsPsych tasks to be developed and deployed to several environments including local browser, standalone desktop executable via Electron Forge, and public web app via Firebase. It uses GitHub Actions to make builds and deploys automatic, repeatable, and traceable.

For this project we upgraded several Honeycomb dependencies including React and Webpack v3 to v5, and jsPsych v6 to v7. We also held an in-person training workshop aimed at getting users up and running.

MGL Metal

This is a contract project with Stanford University, supported by the NIH and MathWorks.

MGL has been a tried-and-true toolbox for psychophysics research, exposing OpenGL functionality to MATLAB by way of C functions.

Many MGL projects run on OS X / macOS, but recent versions of macOS have removed OpenGL support, leaving users in a tight spot.

For the MGL Metal project we are rewriting MGL’s graphics functionality using Apple’s new Metal API, along with Xcode, Swift, and C.

In addition to reimplementing existing functionality, we’ve made an explicit contract between the MATLAB client and the graphics service including socket communication, data serialization/deserialization, and a command protocol. This contract supports robust testing and may support other graphics back-end implementations, for example with Vulkan.

A previous version of MGL was used as part of the Snow Dots project, mentioned below.

Fall Free

Fall Free is a hobby project and a game I want to release. The game itself is about space exploration, inspired by the classic Solar Jetman. I’m developing with Kotlin and libGDX.

For the game’s engine, I’ve been interested in modeling rotating, rigid bodies. I used the SymPy computer algebra system to apply conservation laws and solve for elastic and inelastic collisions of bodies.

I’m also interested in how to predict intersections, as opposed to detecting intersections that have already happened. This led to interesting algebra, trig, and numerical issues, including fast, accurate solvers for quadratic and quartic polynomials.
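As a simplified illustration of predicting an intersection rather than detecting one after the fact, the sketch below finds the time at which two moving circles first touch. It is not the Fall Free engine (which is written in Kotlin and handles rotating bodies): it assumes circles, constant velocities, and no rotation, so the problem reduces to a single quadratic. The function names are hypothetical. The quadratic solver uses the standard trick of computing one root without subtracting nearly equal numbers, then recovering the other from the product of the roots.

```python
import math


def solve_quadratic(a, b, c):
    """Real roots of a*x^2 + b*x + c = 0 in ascending order."""
    if a == 0.0:
        # Degenerate case: the equation is linear or constant.
        return [] if b == 0.0 else [-c / b]
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return []
    # Give the square root the same sign as b so the sum never cancels.
    q = -0.5 * (b + math.copysign(math.sqrt(disc), b))
    if q == 0.0:
        return [0.0]  # only when b == 0 and c == 0: double root at zero
    # The set collapses an exactly repeated root into one entry.
    return sorted({q / a, c / q})


def time_of_impact(p1, v1, r1, p2, v2, r2):
    """Earliest t >= 0 when two moving circles touch, or None if they never do."""
    px, py = p2[0] - p1[0], p2[1] - p1[1]  # relative position
    vx, vy = v2[0] - v1[0], v2[1] - v1[1]  # relative velocity
    # Solve |p + v*t|^2 = (r1 + r2)^2 for t.
    a = vx * vx + vy * vy
    b = 2.0 * (px * vx + py * vy)
    c = px * px + py * py - (r1 + r2) ** 2
    if c <= 0.0:
        return 0.0  # already touching or overlapping
    roots = [t for t in solve_quadratic(a, b, c) if t >= 0.0]
    return min(roots) if roots else None


# Two unit circles 10 apart, closing head-on at a combined speed of 2.
head_on = time_of_impact((0.0, 0.0), (1.0, 0.0), 1.0, (10.0, 0.0), (-1.0, 0.0), 1.0)
# Same motion, but offset far enough sideways that the circles pass each other.
miss = time_of_impact((0.0, 0.0), (1.0, 0.0), 1.0, (10.0, 5.0), (-1.0, 0.0), 1.0)
```

In the head-on case the gap between the circle edges is 8 and closes at speed 2, so contact is predicted at t = 4. In the offset case the discriminant is negative and no impact is predicted.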

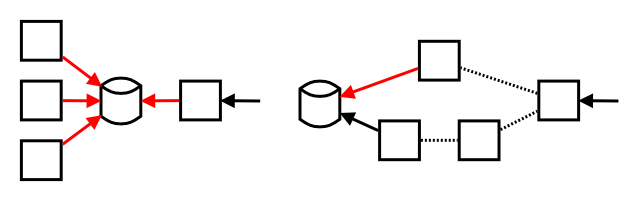

Authorizer Modernization

This project was for a financial tech startup that was acquired by a larger bank while I was an employee.

We needed to upgrade a transaction authorization API for larger scale, availability, robustness, and supportability. An existing legacy service connected directly to a monolith database and contended for resources like locks and id sequences with other processes like batch processing and a servicing UI.

I designed and led the development for a new approach that decomposed the legacy authorizer into micro-services using Java, Kotlin, Spring Boot, and Kubernetes. We integrated the micro-services using Kafka messaging, allowing them to be horizontally scalable and only loosely coupled to the back end database.

Statement Modernization

This was another project for the same financial tech startup that was acquired by a larger bank while I was an employee.

We needed to upgrade the customer statement generation batch process for scale, robustness, and supportability. The previous legacy process was based on brittle database stored procedures, shell scripts, file system locations, two-way SFTP integrations, and numerous undocumented dependencies among all of these. This process was unsupportable by all but a few “heroic experts”.

I helped design and implement a replacement process as a scratch rewrite using Java and Spring Boot for services, JDBC, Postgres, and cloud storage for persistence, and HTTP and SFTP for integration. We created an explicit ETL process to decouple the statement data processing and document rendering from live operations.

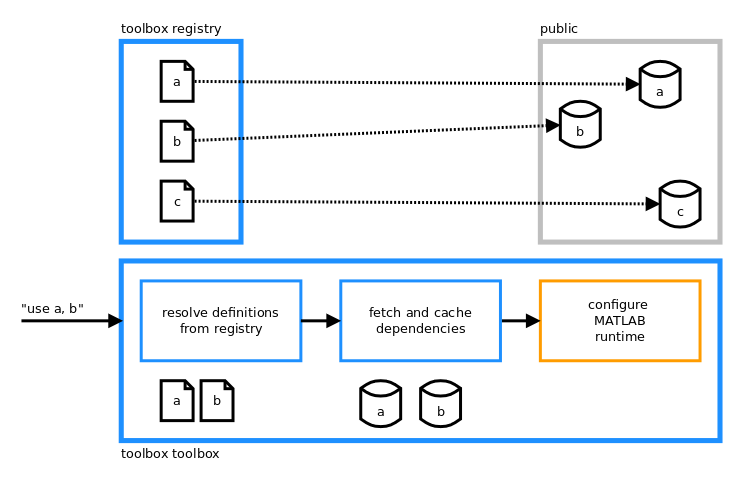

ToolboxToolbox and ToolboxHub

I started ToolboxToolbox and ToolboxRegistry as open-source tools while I was an employee at the University of Pennsylvania.

The aim was to simplify and automate dependency management and runtime configuration for MATLAB projects. This makes projects reproducible, which in turn supports collaboration and automated testing.

The starting place was manual, ad-hoc, and bespoke solutions for obtaining and distributing dependencies and configuring the MATLAB runtime to find them. Without consistent conventions or tools, it was a challenge for users to switch between projects or compose complementary libraries.

Inspired by enterprise tools like Homebrew and Apache Maven, ToolboxToolbox establishes a convention for describing dependencies in JSON files, a public Git registry called ToolboxHub for sharing well-known dependencies, and a client tool for fetching dependencies by name and configuring the MATLAB runtime.

One user remarked, "We didn't think we needed this, but now we can't imagine life without it!"

RenderToolbox

I contributed to the RenderToolbox open-source tool while I was an employee at the University of Pennsylvania.

RenderToolbox aimed to facilitate working with physically-based renderers like PBRT and Mitsuba. It defined a MATLAB-based workflow for declarative batch processing of scene data, including Blender, Collada, and Assimp, and image data including multi-spectral and sRGB.

We produced a Journal of Vision methods paper aimed at sharing the tool with collaborators.

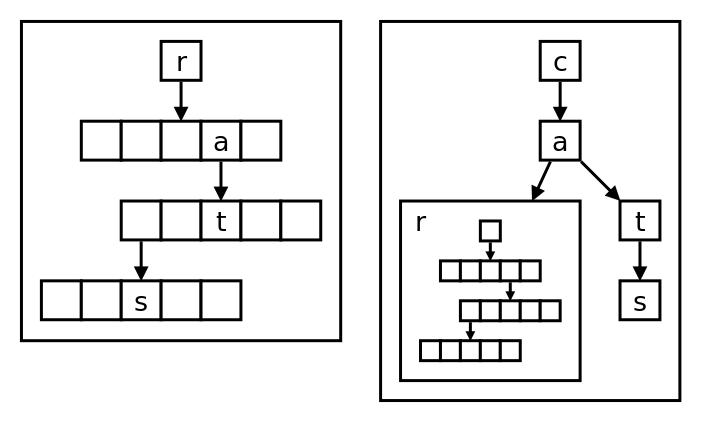

Cache-conscious hash table and HAT-trie

This was a contract project for a tech startup where I implemented data structures in Java. The cache-conscious hash table used Java arrays to improve data locality and exploit CPU cache pre-fetching, in order to achieve faster performance than standard Java collections. The HAT-trie data structure supported prefix indexing of enterprise data, and incorporated the cache-conscious hash table as a component in order to reduce the number of nodes loaded into memory at a time.

PODS OpenHDS System

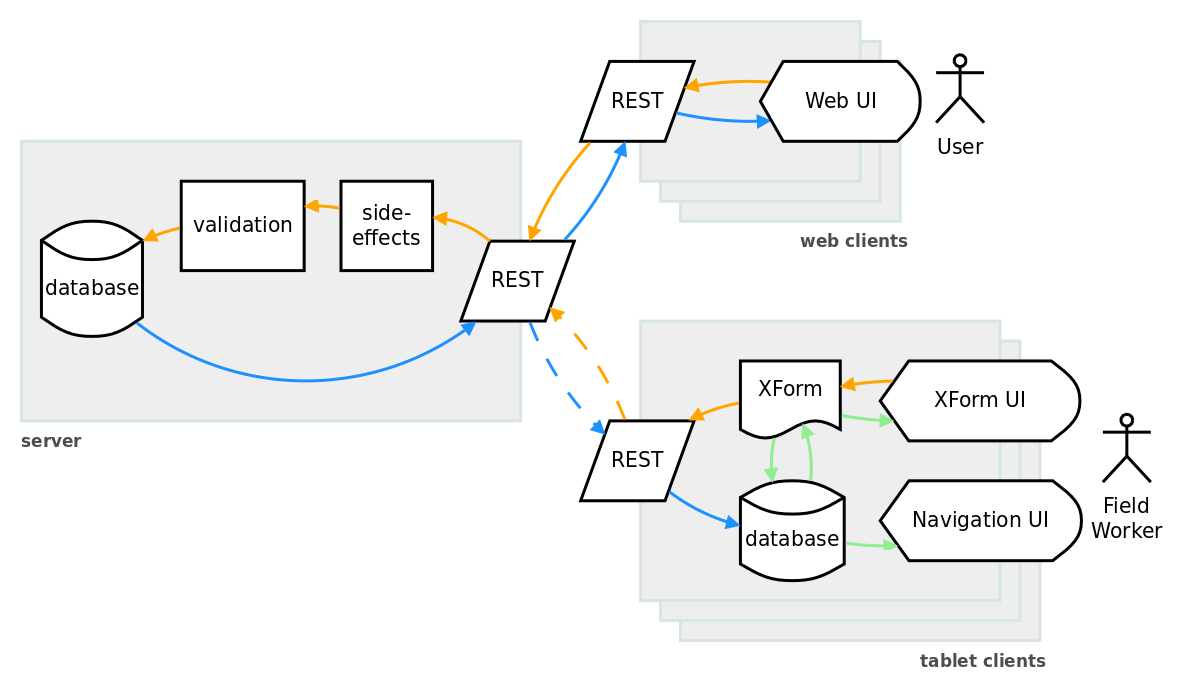

This was my computer science master’s research project and thesis work, at the University of Southern Maine. We provided software and tech support to an ongoing public health intervention in Equatorial Guinea, aimed at administering and studying a malaria vaccine.

The project required offline data collection in areas without Internet connectivity. This presented challenges for data collection and synchronization.

My work included contributions to an existing, deployed information system called OpenHDS written with Java, Spring, Hibernate, Android, and SQLite. My thesis included reflections on lessons learned from OpenHDS and the design of a revised system, the “PODS OpenHDS System”, that addressed some of the challenges of offline data collection, aggregation, syncing to mobile devices, online web administration, and interoperability between mobile applications.

Tower of Psych and Snow Dots

I started the Tower of Psych and Snow Dots open-source projects while I was an employee at the University of Pennsylvania and continued them while contracting at the University of Washington with support from the Howard Hughes Medical Institute.

These projects aimed to facilitate human psychophysics research (i.e. repeatable video games with data collection).

One design goal was to implement experiment flow control and data collection using MATLAB, since this would be the same language used for data analysis. This presented some design challenges around the single-threaded MATLAB runtime and scheduling uncertainty from general-purpose operating systems and hardware. We called this flow control component Tower of Psych.

We made an opinionated separation between Tower of Psych and Snow Dots, with Snow Dots encapsulating many lower-level details. Snow Dots included C functions to integrate MATLAB with OpenGL graphics (based on MGL, as mentioned above), USB HID devices for data collection, and UDP network sockets for connecting the machines that made up the experiment rigs.

These projects were used by more than one research lab, generated more than one peer-reviewed publication, and were used for over a decade.